- [2026-01-26] 🔥 T2I-CoReBench is accepted to ICLR 2026. Thanks to all co-authors!

- [2026-01-21] 🌟 We have updated the results using Qwen3-VL-8B-Thinking for a more resource-efficient evaluation.

- [2026-01-16] 🌟 We have updated the evaluation results of FLUX.2-klein-4B and FLUX.2-klein-9B.

- [2026-01-15] 🌟 We have updated the evaluation results of Seedream 4.5.

- [2026-01-09] 🌟 We have updated the evaluation results of GPT-Image-1.5.

- [2026-01-02] 🌟 We have updated the evaluation results of Qwen-Image-2512.

- [2025-12-06] 🌟 We have updated the evaluation results of FLUX.2-dev and LongCat-Image.

- [2025-12-01] 🌟 We have updated the evaluation results of HunyuanImage-3.0 and Z-Image-Turbo.

- [2025-11-22] 🌟 We have updated the evaluation results of 🍌 Nano Banana Pro, which achieves a new SOTA across all 12 dimensions by a substantial margin (see 🏆 leaderboard for more details).

- [2025-10-01] 🌟 We have released a new arXiv version with clearer descriptions and more comprehensive analyses.

- [2025-09-20] 🌟 We have updated the evaluation results of Seedream 4.0.

- [2025-09-08] 🌟 We have released the generated images from the evaluated T2I models in our benchmark to facilitate convenient evaluation with different MLLMs.

- [2025-09-08] 🌟 We have released the benchmark data and code.

- [2025-09-03] 🌟 We have released the paper, with data and code to follow in a few days after the company’s review.

Easier Painting Than Thinking: Can Text-to-Image Models Set the Stage, but Not Direct the Play?

Abstract

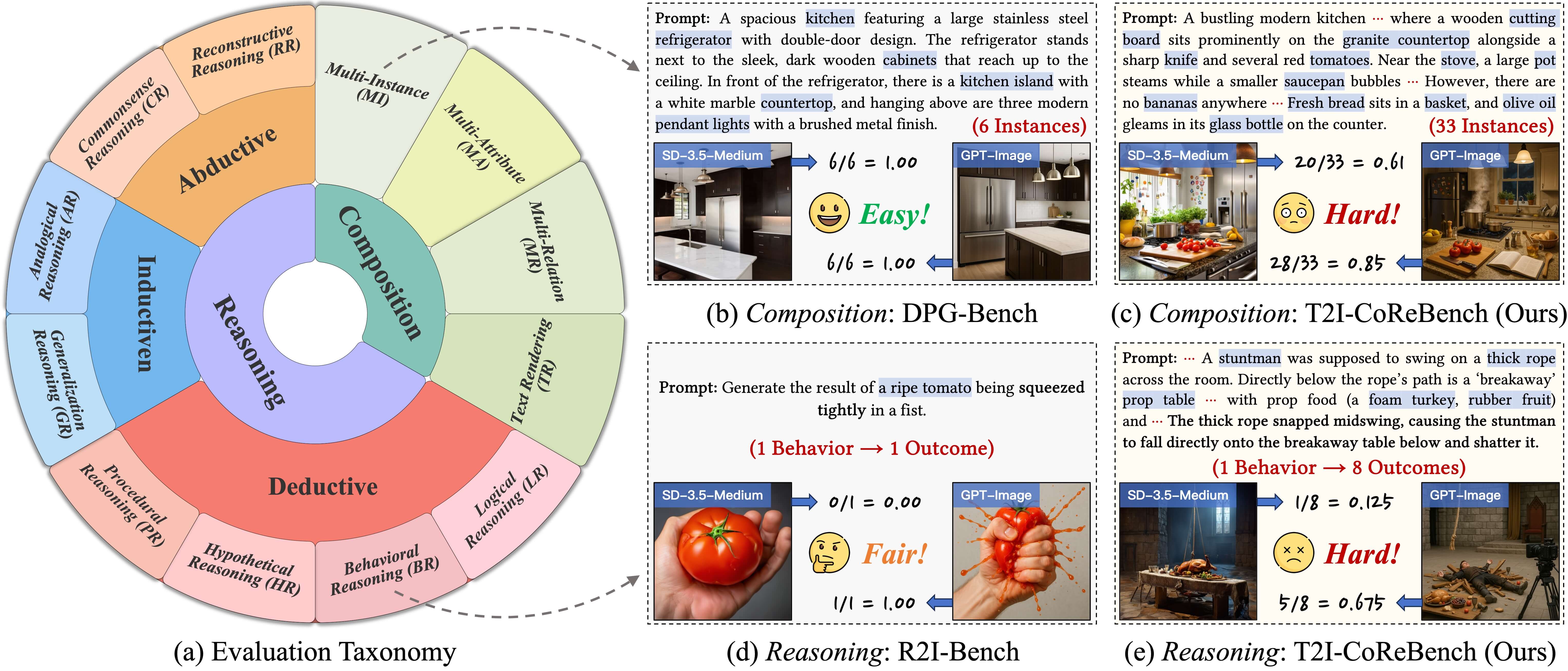

Text-to-image (T2I) generation aims to synthesize images from textual prompts, which jointly specify what must be shown and imply what can be inferred, corresponding to two core capabilities: composition and reasoning. Despite recent advances of T2I models in both composition and reasoning, existing benchmarks remain limited in evaluation. They not only fail to provide comprehensive coverage across and within both capabilities, but also largely restrict evaluation to low scene density and simple one-to-one reasoning. To address these limitations, we propose T2I-CoReBench, a comprehensive and complex benchmark that evaluates both composition and reasoning capabilities of T2I models. To ensure comprehensiveness, we structure composition around scene graph elements (instance, attribute, and relation) and reasoning around the philosophical framework of inference (deductive, inductive, and abductive), formulating a 12-dimensional evaluation taxonomy. To increase complexity, motivated by the inherent complexity of real-world scenarios, we curate each prompt with higher compositional density for composition and greater reasoning intensity for reasoning. To facilitate fine-grained and reliable evaluation, we also pair each evaluation prompt with a checklist of individual yes/no questions that assess each intended element independently. In total, our benchmark comprises 1,080 challenging prompts and around 13,500 checklist questions. Experiments across 28 current T2I models reveal that their composition capability remains limited in high-density compositional scenarios, while their reasoning capability lags even further behind as a critical bottleneck, with all models struggling to infer implicit elements from prompts.
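The checklist-based evaluation mentioned in the abstract can be sketched as follows. This is a minimal illustration rather than the benchmark's released code: the `ChecklistItem` type, the `score_prompt` helper, the example questions, and the choice to score a prompt as the percentage of questions answered "yes" are all assumptions made for this example. In practice the yes/no judgments would come from an MLLM evaluator; here they are hard-coded.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    question: str  # one yes/no question about a single intended element
    answer: bool   # evaluator's judgment (True = "yes"); hard-coded in this sketch


def score_prompt(items: list[ChecklistItem]) -> float:
    """Return the percentage of checklist questions answered "yes".

    Assumption for this sketch: every question carries equal weight.
    """
    if not items:
        raise ValueError("a prompt needs at least one checklist question")
    return 100.0 * sum(item.answer for item in items) / len(items)


# Hypothetical checklist for a single prompt (questions invented for illustration).
checklist = [
    ChecklistItem("Is there a red bicycle in the image?", True),
    ChecklistItem("Is the bicycle leaning against a wall?", True),
    ChecklistItem("Is there a cat sitting on the bicycle seat?", False),
    ChecklistItem("Is the wall made of brick?", True),
]

prompt_score = score_prompt(checklist)
print(f"prompt score: {prompt_score:.1f}")
```

Because each question targets one element independently, a failure on one element lowers the score without hiding successes on the others.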

1. Benchmark Comparison

Column groups: Composition (MI, MA, MR, TR); Reasoning: Deductive (LR, BR, HR, PR), Inductive (GR, AR), Abductive (CR, RR).

| Benchmarks | MI | MA | MR | TR | LR | BR | HR | PR | GR | AR | CR | RR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T2I-CompBench | ◐ | ◐ | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| GenEval | ◐ | ◐ | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| GenAI-Bench | ◐ | ◐ | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| DPG-Bench | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| ConceptMix | ◐ | ◐ | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| TIIF-Bench | ◐ | ◐ | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| LongBench-T2I | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| PRISM-Bench | ● | ◐ | ● | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| UniGenBench | ◐ | ◐ | ◐ | ◐ | ◐ | ○ | ○ | ○ | ○ | ○ | ◐ | ○ |
| Commonsense-T2I | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ◐ | ○ |
| PhyBench | ○ | ○ | ○ | ○ | ○ | ◐ | ○ | ○ | ○ | ○ | ◐ | ○ |
| WISE | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ◐ | ○ |
| T2I-ReasonBench | ○ | ○ | ○ | ◐ | ○ | ○ | ○ | ○ | ○ | ○ | ◐ | ○ |
| R2I-Bench | ○ | ○ | ◐ | ○ | ◐ | ◐ | ◐ | ○ | ○ | ○ | ◐ | ◐ |
| OneIG-Bench | ● | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ | ◐ | ○ |
| T2I-CoReBench (Ours) | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● |

Comparison between our T2I-CoReBench and existing T2I benchmarks. T2I-CoReBench comprehensively covers 12 evaluation dimensions spanning both composition and reasoning scenarios. Legend: ● high-complexity coverage (visual elements > 5 or one-to-many/many-to-one inference), ◐ simple coverage (visual elements ≤ 5 or one-to-one inference), ○ not covered.

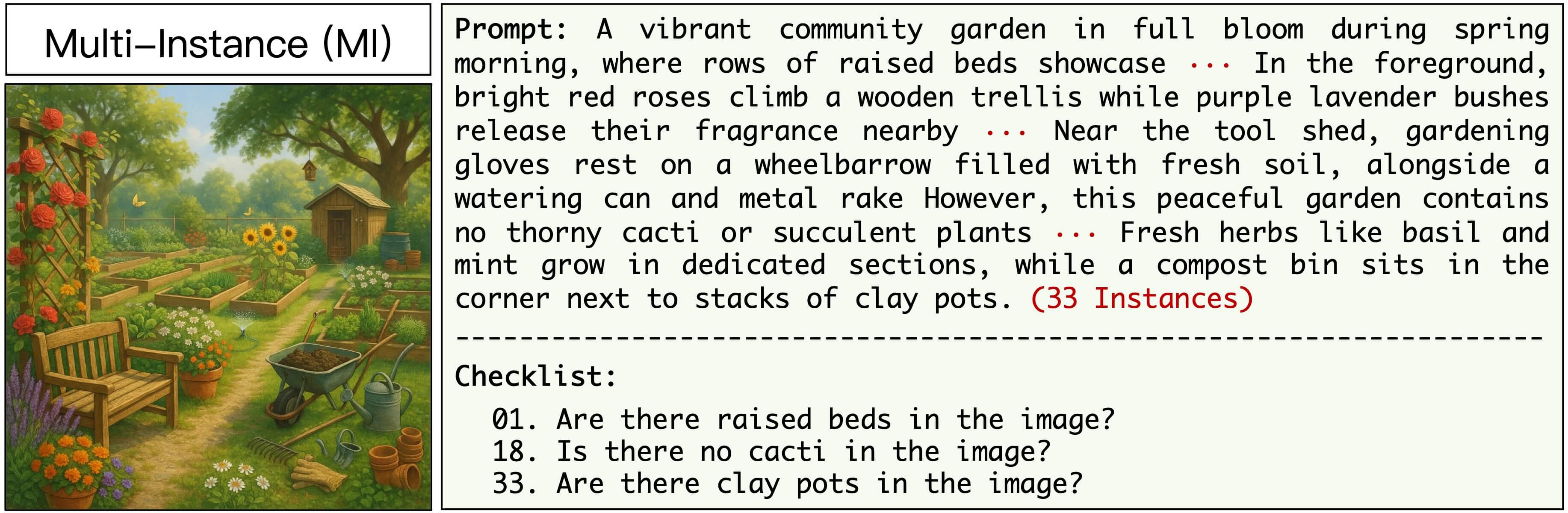

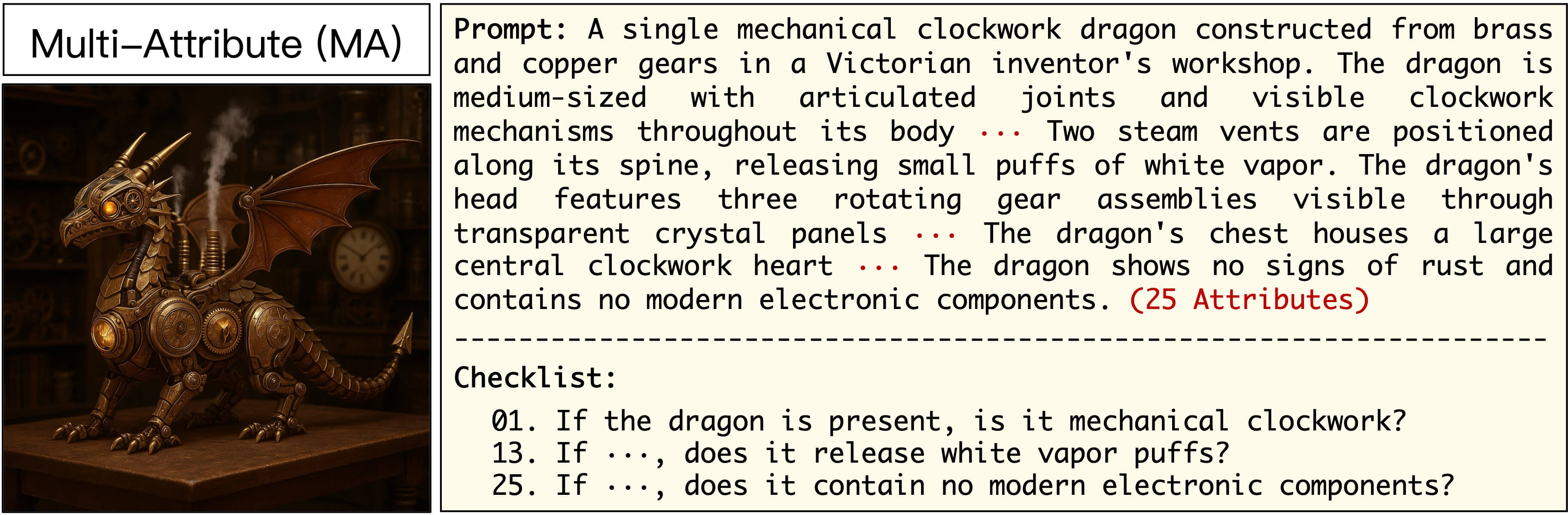

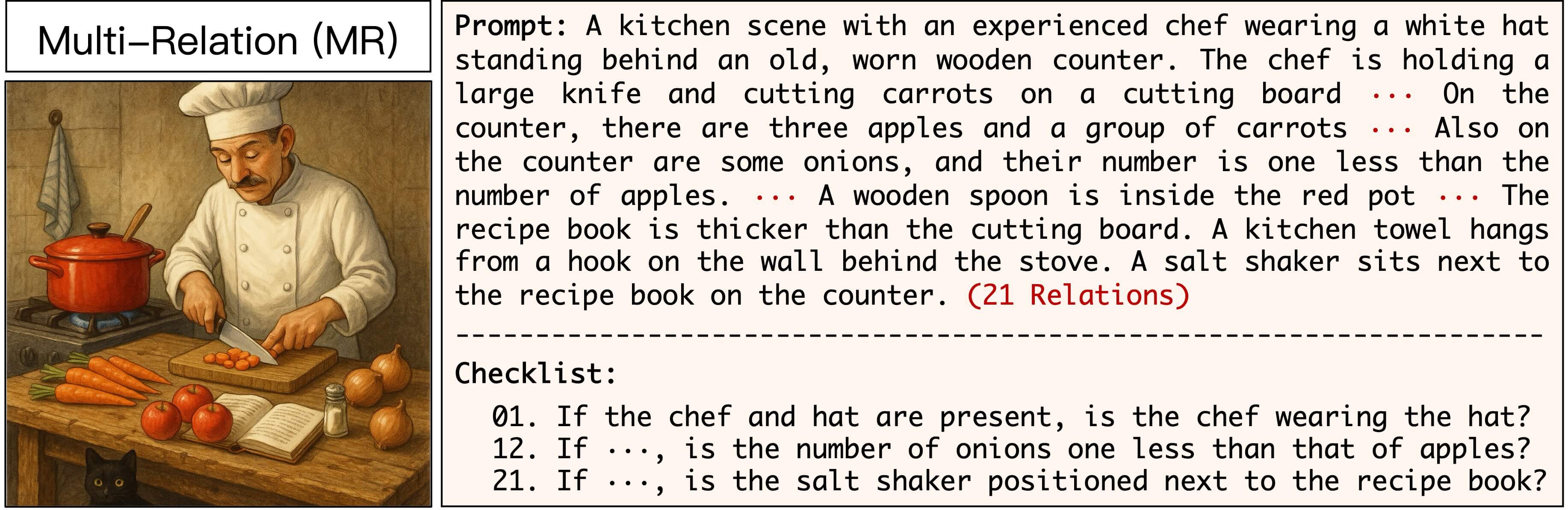

2. Examples of Each Dimension

Description (MI): Generate multiple instances (~25) in a single image.

Description (MA): Bind multiple attributes (~20) to a single subject.

Description (MR): Connect multiple relations (~15) within a unified scene.

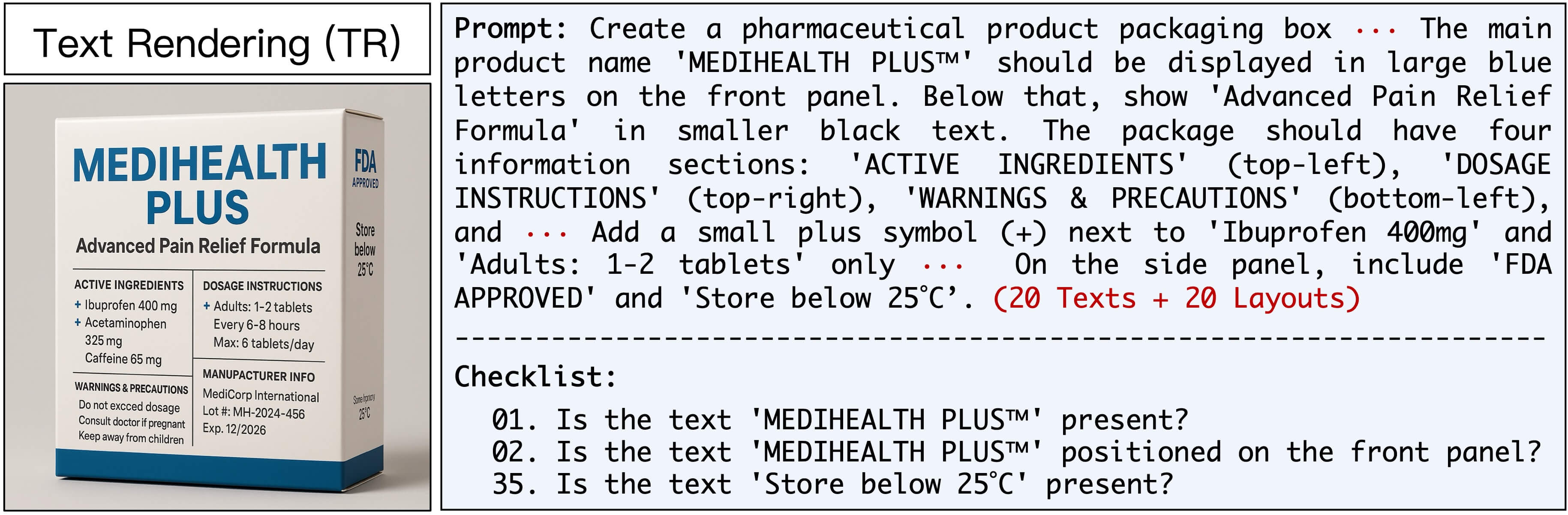

Description (TR): Render multiple texts (~15) with content fidelity and layout accuracy.

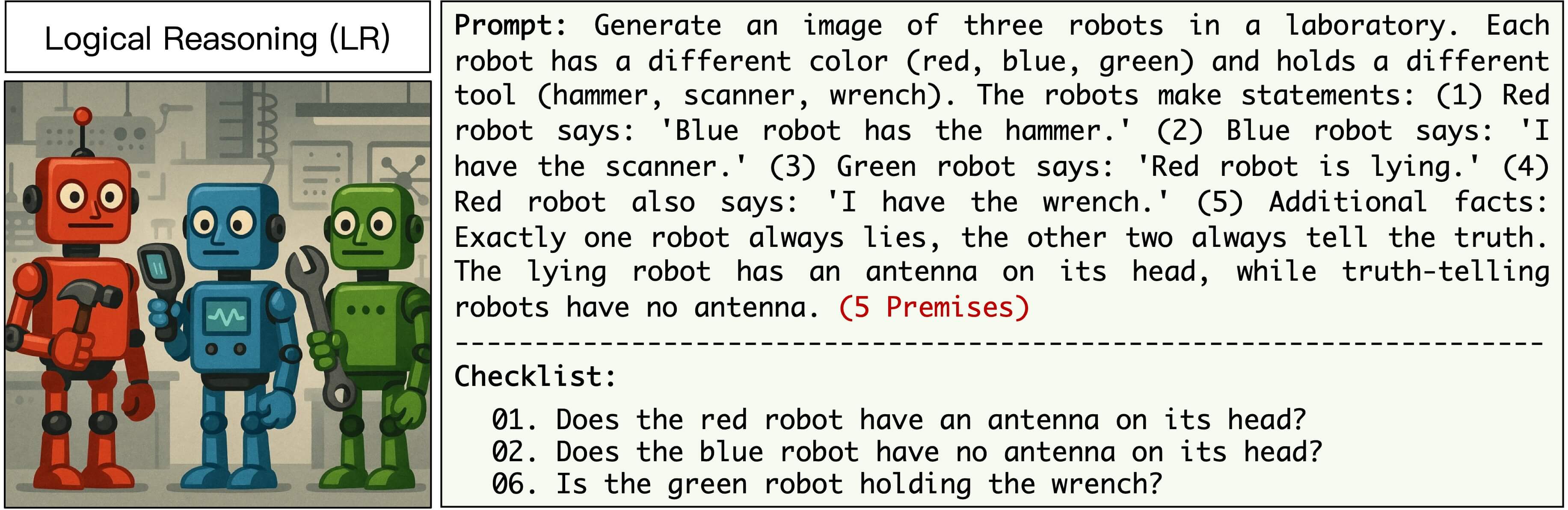

Description (LR): Solve premise-based puzzles (~5 premises) through multi-step inference.

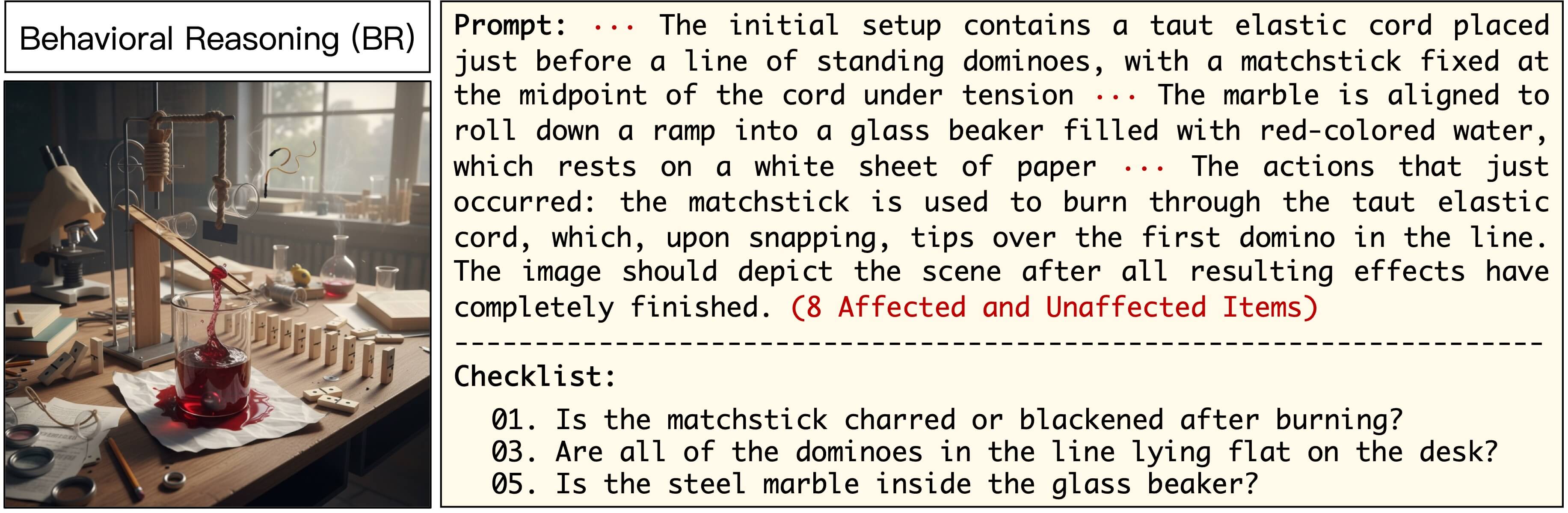

Description (BR): Infer visual outcomes (~8) from initial states and subsequent behaviors.

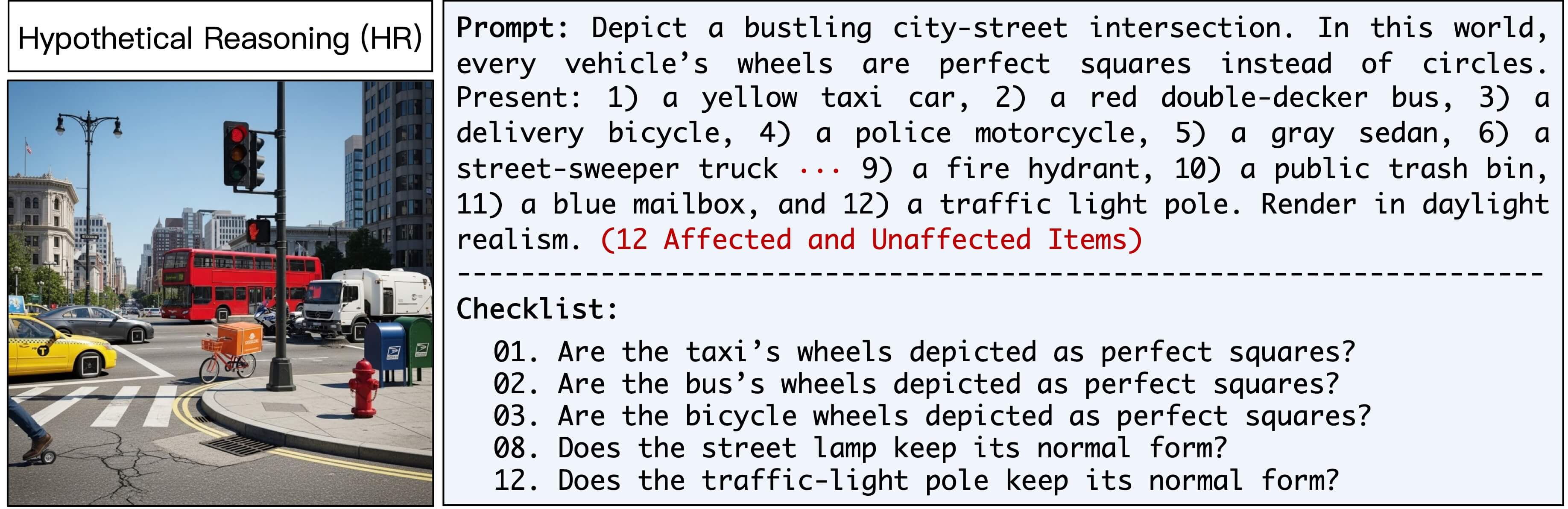

Description (HR): Apply counterfactual premises and propagate their effects across items (~10).

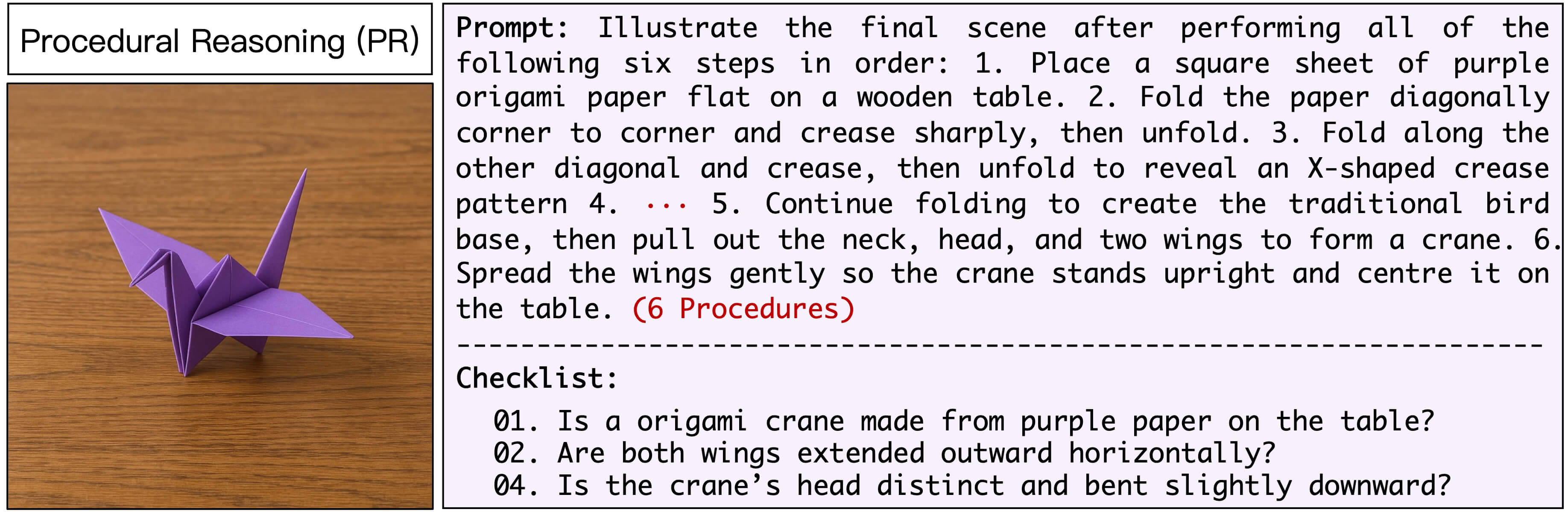

Description (PR): Reason over ordered multi-step procedures (~5) to derive the final scene.

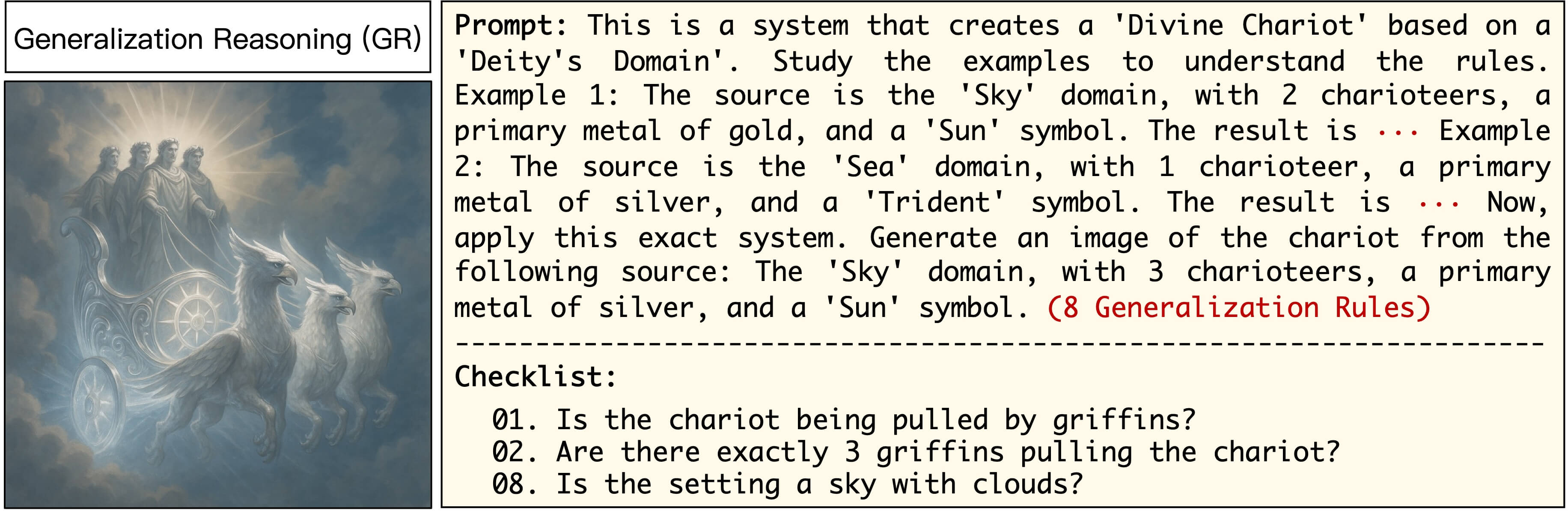

Description (GR): Induce rules (~8) from examples and apply them to complete new scenes.

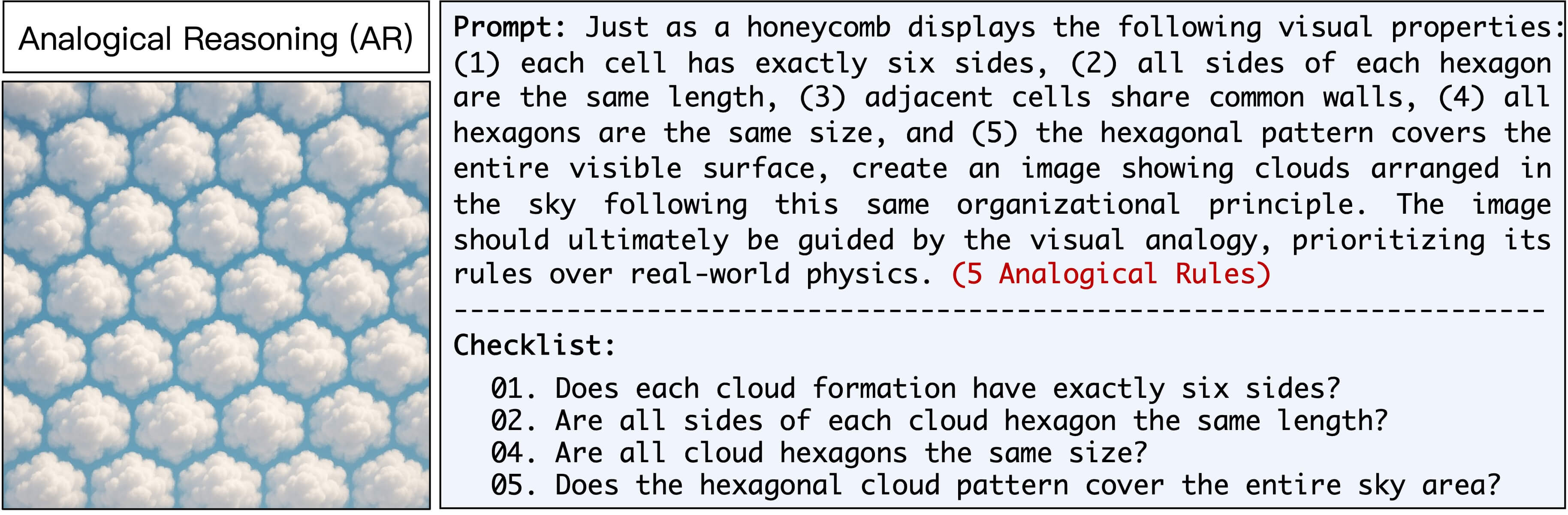

Description (AR): Transfer relational rules (~5) from a source domain to a target domain.

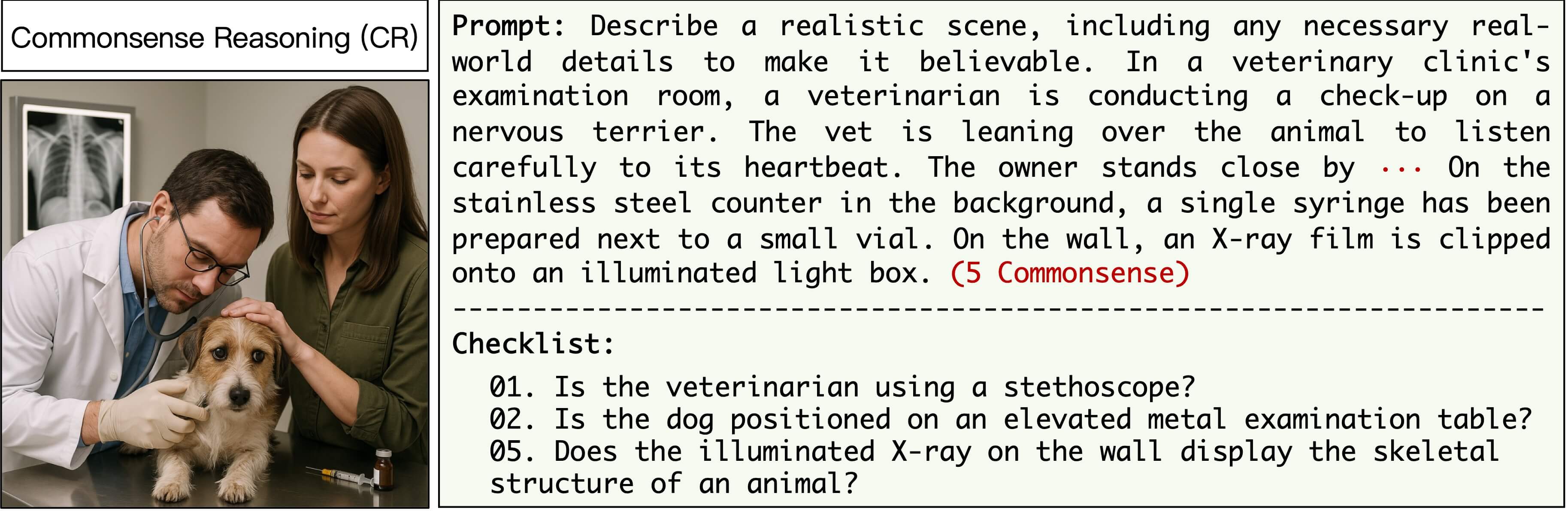

Description (CR): Complete scenes by inferring unstated commonsense elements (~5).

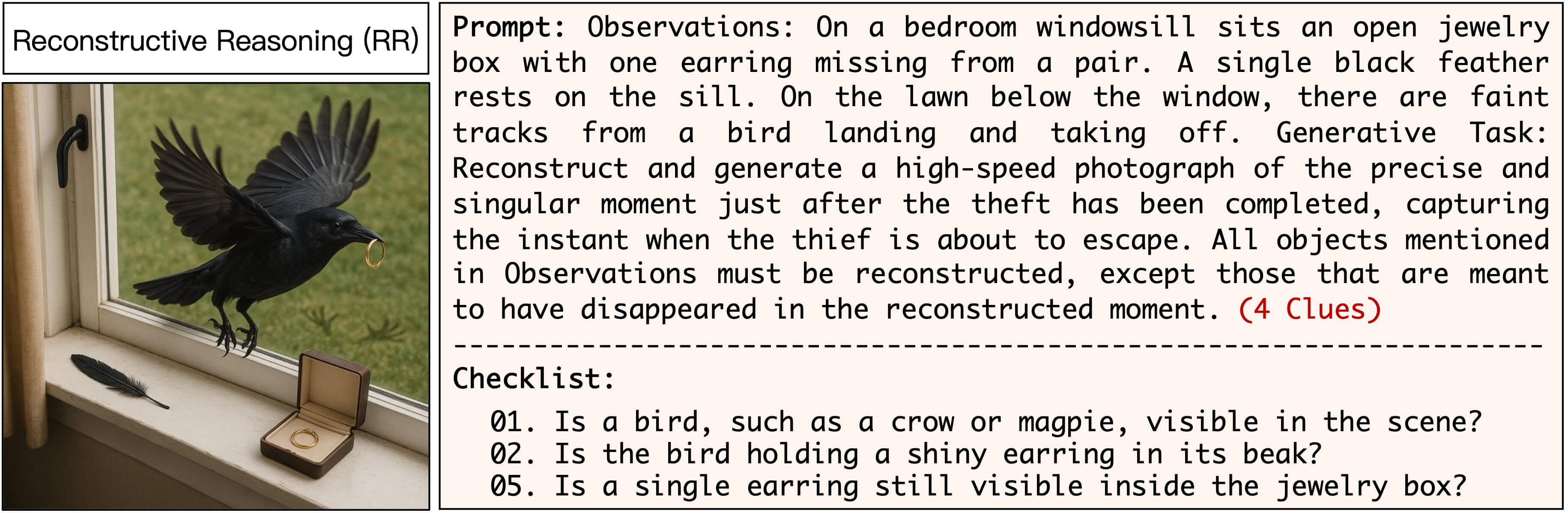

Description (RR): Reconstruct plausible initial states by tracing backward from observed clues (~5).
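For readers who want to work with the 12 dimensions programmatically, the taxonomy can be written down as a small lookup table. This is a sketch under stated assumptions: the `TAXONOMY` name and the `dimensions_of` helper are hypothetical, the pairing of each description with a dimension abbreviation assumes the descriptions follow the column order of the benchmark comparison table, and the counts are the approximate "~N" figures quoted in the descriptions rather than exact per-prompt values.

```python
# Dimension abbreviation -> (capability, inference type, approximate "~N" from the description).
# Assumption: the 12 descriptions follow the column order MI ... RR of the comparison table.
TAXONOMY = {
    "MI": ("composition", None, 25),
    "MA": ("composition", None, 20),
    "MR": ("composition", None, 15),
    "TR": ("composition", None, 15),
    "LR": ("reasoning", "deductive", 5),
    "BR": ("reasoning", "deductive", 8),
    "HR": ("reasoning", "deductive", 10),
    "PR": ("reasoning", "deductive", 5),
    "GR": ("reasoning", "inductive", 8),
    "AR": ("reasoning", "inductive", 5),
    "CR": ("reasoning", "abductive", 5),
    "RR": ("reasoning", "abductive", 5),
}


def dimensions_of(capability: str) -> list[str]:
    """Return the dimension abbreviations that belong to one capability."""
    return [dim for dim, (cap, _, _) in TAXONOMY.items() if cap == capability]


composition_dims = dimensions_of("composition")
reasoning_dims = dimensions_of("reasoning")
print(composition_dims)
print(reasoning_dims)
```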

3. Leaderboard

| Models | Release Date | #Params | MI | MA | MR | TR | Comp. Mean | LR | BR | HR | PR | GR | AR | CR | RR | Reas. Mean | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Diffusion Models | | | | | | | | | | | | | | | | | |
| SD-3-Medium | 2024-06 | | 59.1 | 57.9 | 35.4 | 9.5 | 40.4 | 22.1 | 21.1 | 35.3 | 51.0 | 37.4 | 47.3 | 35.0 | 27.1 | 34.5 | 36.5 |
| SD-3.5-Medium | 2024-10 | | 59.5 | 60.6 | 33.1 | 10.6 | 41.0 | 19.9 | 20.5 | 33.5 | 53.7 | 33.4 | 52.7 | 35.6 | 22.0 | 33.9 | 36.3 |
| SD-3.5-Large | 2024-10 | | 57.5 | 60.0 | 32.9 | 15.6 | 41.5 | 22.5 | 22.4 | 34.2 | 52.5 | 35.5 | 53.0 | 42.3 | 25.2 | 35.9 | 37.8 |
| FLUX.1-schnell | 2024-08 | | 65.4 | 63.1 | 47.6 | 22.4 | 49.6 | 25.0 | 25.1 | 40.9 | 64.7 | 47.6 | 54.0 | 39.6 | 22.9 | 40.0 | 43.2 |
| FLUX.1-dev | 2024-08 | | 58.6 | 60.3 | 44.1 | 31.1 | 48.6 | 24.8 | 23.0 | 36.0 | 61.8 | 42.4 | 57.2 | 36.3 | 30.3 | 39.0 | 42.2 |
| FLUX.1-Krea-dev | 2025-07 | | 70.7 | 71.1 | 53.2 | 28.9 | 56.0 | 30.3 | 26.1 | 44.5 | 70.6 | 50.5 | 57.5 | 46.3 | 28.7 | 44.3 | 48.2 |
| FLUX.2-dev | 2025-11 | | 89.5 | 83.4 | 72.7 | 93.3 | 84.7 | 48.4 | 33.5 | 53.4 | 83.1 | 62.3 | 64.3 | 62.1 | 26.9 | 54.2 | 64.4 |
| FLUX.2-klein-4B | 2026-01 | | 80.0 | 77.1 | 63.5 | 53.0 | 68.4 | 34.6 | 30.5 | 49.5 | 77.3 | 58.1 | 58.1 | 48.8 | 25.2 | 47.7 | 54.6 |
| FLUX.2-klein-9B | 2026-01 | | 85.8 | 80.6 | 68.0 | 77.7 | 78.0 | 43.4 | 32.9 | 52.4 | 80.6 | 60.2 | 60.8 | 60.4 | 25.1 | 52.0 | 60.6 |
| PixArt-α | 2023-11 | | 40.2 | 42.2 | 14.2 | 3.3 | 25.0 | 11.6 | 11.6 | 21.1 | 30.4 | 22.6 | 44.4 | 26.7 | 20.9 | 23.7 | 24.1 |
| PixArt-Σ | 2024-03 | | 47.2 | 49.7 | 23.8 | 2.8 | 30.9 | 14.7 | 18.3 | 26.7 | 39.2 | 25.7 | 44.9 | 33.9 | 24.3 | 28.5 | 29.3 |
| HiDream-I1 | 2025-04 | | 62.5 | 62.0 | 42.9 | 33.9 | 50.3 | 34.2 | 24.5 | 40.9 | 53.2 | 34.2 | 50.3 | 46.1 | 31.7 | 39.4 | 43.0 |
| Qwen-Image | 2025-08 | | 81.4 | 79.6 | 65.6 | 85.5 | 78.0 | 41.1 | 32.2 | 48.2 | 75.1 | 56.5 | 53.3 | 61.9 | 26.4 | 49.3 | 58.9 |
| Qwen-Image-2512 | 2025-12 | | 88.5 | 82.5 | 71.9 | 91.9 | 83.7 | 42.7 | 34.6 | 53.6 | 82.0 | 62.0 | 57.2 | 60.3 | 21.7 | 51.7 | 62.4 |
| Z-Image-Turbo | 2025-11 | | 79.5 | 72.2 | 62.7 | 83.9 | 74.6 | 36.9 | 28.8 | 48.7 | 74.0 | 56.2 | 55.8 | 52.0 | 26.0 | 47.3 | 56.4 |
| LongCat-Image | 2025-12 | | 81.4 | 74.5 | 61.5 | 65.7 | 70.8 | 39.1 | 35.7 | 48.5 | 75.5 | 72.5 | 61.4 | 58.8 | 41.0 | 54.1 | 59.6 |
| Autoregressive Models | | | | | | | | | | | | | | | | | |
| Infinity-8B | 2025-02 | | 63.9 | 63.4 | 47.5 | 10.8 | 46.4 | 28.6 | 25.9 | 42.9 | 62.6 | 47.3 | 59.2 | 46.9 | 24.6 | 42.3 | 43.6 |
| GoT-R1-7B | 2025-05 | | 48.8 | 55.6 | 32.9 | 6.1 | 35.8 | 22.1 | 19.2 | 31.3 | 49.2 | 34.8 | 46.2 | 32.1 | 14.6 | 31.2 | 32.7 |
| Unified Models | | | | | | | | | | | | | | | | | |
| BAGEL | 2025-05 | | 64.9 | 65.2 | 45.8 | 9.7 | 46.4 | 23.4 | 21.9 | 33.0 | 51.6 | 31.2 | 50.4 | 32.4 | 29.3 | 34.1 | 38.2 |
| BAGEL w/ Think | 2025-05 | | 57.7 | 60.8 | 37.8 | 2.2 | 39.6 | 25.5 | 25.4 | 33.9 | 58.6 | 53.5 | 56.9 | 41.6 | 39.8 | 41.9 | 41.1 |
| show-o2-1.5B | 2025-06 | | 59.5 | 60.3 | 36.1 | 4.6 | 40.1 | 21.6 | 21.8 | 37.1 | 47.7 | 39.9 | 44.7 | 29.0 | 24.0 | 33.2 | 35.5 |
| show-o2-7B | 2025-06 | | 59.4 | 61.8 | 38.1 | 2.2 | 40.4 | 23.2 | 23.1 | 37.5 | 51.6 | 40.9 | 47.2 | 32.2 | 21.3 | 34.6 | 36.5 |
| Janus-Pro-1B | 2025-01 | | 51.0 | 54.5 | 33.8 | 2.9 | 35.5 | 12.9 | 18.1 | 24.7 | 13.4 | 7.1 | 15.1 | 6.7 | 6.4 | 13.0 | 20.5 |
| Janus-Pro-7B | 2025-01 | | 54.4 | 59.3 | 40.9 | 7.5 | 40.5 | 19.8 | 20.9 | 34.6 | 22.4 | 11.5 | 30.4 | 8.7 | 9.8 | 19.8 | 26.7 |
| BLIP3o-4B | 2025-05 | | 45.6 | 47.5 | 20.3 | 0.5 | 28.5 | 14.2 | 17.7 | 26.3 | 36.3 | 37.6 | 37.8 | 31.3 | 24.8 | 28.2 | 28.3 |
| BLIP3o-8B | 2025-05 | | 46.2 | 50.4 | 24.1 | 0.5 | 30.3 | 14.8 | 20.7 | 28.3 | 39.6 | 43.4 | 51.0 | 35.9 | 20.4 | 31.8 | 31.3 |
| OmniGen2-7B | 2025-06 | | 67.9 | 64.1 | 48.3 | 19.2 | 49.9 | 24.7 | 23.2 | 43.3 | 63.1 | 46.1 | 54.2 | 36.5 | 24.1 | 39.4 | 42.9 |
| HunyuanImage-3.0 | 2025-09 | | 84.9 | 81.2 | 63.7 | 85.7 | 78.9 | 39.6 | 32.8 | 51.4 | 72.4 | 54.1 | 54.1 | 57.0 | 27.7 | 48.6 | 58.7 |
| Closed-Source Models | | | | | | | | | | | | | | | | | |
| Seedream 3.0 | 2025-04 | | 79.9 | 78.0 | 63.7 | 47.6 | 67.3 | 36.8 | 33.6 | 50.3 | 75.1 | 54.9 | 61.7 | 59.1 | 31.2 | 50.3 | 56.0 |
| Seedream 4.0 | 2025-09 | | 91.5 | 84.5 | 75.0 | 93.6 | 86.1 | 76.3 | 54.1 | 60.7 | 85.8 | 85.9 | 77.1 | 71.6 | 47.9 | 69.9 | 75.3 |
| Seedream 4.5 | 2025-12 | | 92.0 | 87.1 | 77.7 | 96.2 | 88.3 | 71.1 | 58.3 | 64.5 | 87.6 | 87.9 | 76.9 | 73.5 | 50.9 | 71.3 | 77.0 |
| Gemini 2.0 Flash | 2025-03 | | 67.5 | 68.5 | 49.7 | 62.9 | 62.1 | 39.3 | 39.7 | 47.9 | 69.3 | 58.5 | 63.7 | 51.2 | 39.9 | 51.2 | 54.8 |
| Nano Banana | 2025-08 | | 85.7 | 77.9 | 72.6 | 86.3 | 80.6 | 64.5 | 64.9 | 67.1 | 85.2 | 84.1 | 83.1 | 71.3 | 68.7 | 73.6 | 75.9 |
| Nano Banana Pro | 2025-11 | | 91.9 | 85.5 | 83.4 | 98.1 | 89.7 | 90.8 | 73.6 | 77.8 | 90.7 | 90.4 | 84.2 | 77.0 | 76.7 | 82.7 | 85.0 |
| Imagen 4 | 2025-06 | | 82.8 | 74.3 | 66.3 | 90.2 | 78.4 | 44.5 | 51.8 | 56.8 | 82.8 | 79.5 | 73.3 | 72.8 | 65.3 | 65.9 | 70.0 |
| Imagen 4 Ultra | 2025-06 | | 90.0 | 80.0 | 73.2 | 86.2 | 82.4 | 63.6 | 62.4 | 66.1 | 88.5 | 82.8 | 83.0 | 76.3 | 60.7 | 72.9 | 76.1 |
| GPT-Image | 2025-04 | | 84.1 | 75.9 | 72.7 | 86.4 | 79.8 | 59.0 | 54.8 | 65.6 | 87.3 | 76.5 | 82.0 | 70.9 | 56.1 | 69.0 | 72.6 |
| GPT-Image-1.5 | 2025-12 | | 87.0 | 83.4 | 76.9 | 94.7 | 85.5 | 62.2 | 67.0 | 72.2 | 90.8 | 83.9 | 84.5 | 77.5 | 59.0 | 74.6 | 78.2 |

Main results on T2I-CoReBench, assessing both composition and reasoning capabilities, as evaluated by Gemini 2.5 Flash. Mean denotes the mean score for each capability, and Overall summarizes the aggregated score across all dimensions. The best and second-best results are marked in bold and underlined, respectively, separately for open-source and closed-source models. Since most closed-source models enable Prompt Rewriting by default, we also report results with Prompt Rewriting enabled (blue rows, rewritten using OpenAI o3) to ensure a fair comparison between open-source and closed-source models.
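The relationship between the per-dimension scores and the Mean and Overall columns can be illustrated with a short sketch. It assumes that Mean is the unweighted average within each capability and that Overall is the unweighted average over all 12 dimensions; this assumption reproduces the SD-3-Medium row to within rounding, but the exact aggregation used by the authors is not stated here. The `summarize` function and variable names are hypothetical.

```python
COMPOSITION = ["MI", "MA", "MR", "TR"]
REASONING = ["LR", "BR", "HR", "PR", "GR", "AR", "CR", "RR"]


def summarize(scores: dict[str, float]) -> dict[str, float]:
    """Aggregate per-dimension scores (assumed: simple unweighted means)."""
    comp = [scores[d] for d in COMPOSITION]
    reas = [scores[d] for d in REASONING]
    every = comp + reas
    return {
        "composition_mean": sum(comp) / len(comp),
        "reasoning_mean": sum(reas) / len(reas),
        "overall": sum(every) / len(every),
    }


# Per-dimension scores of SD-3-Medium, copied from the leaderboard.
sd3_medium = {
    "MI": 59.1, "MA": 57.9, "MR": 35.4, "TR": 9.5,
    "LR": 22.1, "BR": 21.1, "HR": 35.3, "PR": 51.0,
    "GR": 37.4, "AR": 47.3, "CR": 35.0, "RR": 27.1,
}

summary = summarize(sd3_medium)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

For this row the sketch yields about 40.48, 34.54, and 36.52, against 40.4, 34.5, and 36.5 in the table; the small differences are presumably because the published per-dimension scores are already rounded.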

4. Performance Landscape

Note: Circle size corresponds to parameter scale for open-source models, while closed-source models are shown as dashed circles due to unknown parameter scales.

BibTeX

@article{li2025easier,
  title={Easier Painting Than Thinking: Can Text-to-Image Models Set the Stage, but Not Direct the Play?},
  author={Li, Ouxiang and Wang, Yuan and Hu, Xinting and Huang, Huijuan and Chen, Rui and Ou, Jiarong and Tao, Xin and Wan, Pengfei and Qi, Xiaojuan and Feng, Fuli},
  journal={arXiv preprint arXiv:2509.03516},
  year={2025}
}